Control Your PC or Phone with Your Eyes

For the longest time, we have used keyboards, physical or virtual, to interact with our electronic devices. When graphical user interfaces were introduced, the mouse and the trackball became common. On smartphones, the touch screen allows the same type of interaction. Later, even voice control became possible. Who does not know Siri from Apple or Alexa from Amazon? But what if we could use our eyes to interact with our PC or phone?

Using your eyes to control a PC is not a new technology, but it has always come with limitations. Cursor movement is relatively easy to translate, but what about a mouse click (or a tap on the phone)? Being able to perform actions is essential for making eye control practical. Starting apps, pushing buttons, and selecting options all require more than just movement.
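One widely used answer to the click question is dwell selection: if the gaze stays within a small area for long enough, that counts as a click. The Python sketch below illustrates the idea only; it is not the method of any product mentioned in this article. The class name `DwellDetector`, the 40-pixel radius, and the 0.8-second dwell time are assumptions chosen for the example.

```python
import math


class DwellDetector:
    """Turns a stream of gaze samples into clicks (hypothetical helper)."""

    def __init__(self, radius_px=40.0, dwell_s=0.8):
        self.radius_px = radius_px  # how far the gaze may wander (assumed value)
        self.dwell_s = dwell_s      # how long the gaze must rest (assumed value)
        self._anchor = None         # where the current fixation started
        self._start = None          # when the current fixation started
        self._fired = False         # whether this fixation already clicked

    def update(self, x, y, t):
        """Feed one gaze sample (pixels, seconds).

        Returns the click position once per fixation, otherwise None.
        """
        moved_away = (
            self._anchor is None
            or math.dist(self._anchor, (x, y)) > self.radius_px
        )
        if moved_away:
            # The gaze left the area: start timing a new fixation here.
            self._anchor = (x, y)
            self._start = t
            self._fired = False
            return None
        if not self._fired and t - self._start >= self.dwell_s:
            self._fired = True
            return self._anchor
        return None
```

A shorter dwell time feels faster but causes more accidental clicks, since simply reading a label also keeps the eyes in one place. That trade-off is part of why clicking is harder than cursor movement.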

Advantages of eye control

The first advantage is fairly obvious: you don’t need your hands. On a phone, that means one hand is enough to hold it. On a desktop computer this matters less, although portable computers can also benefit from operating this way. Your other hand stays free for something else while your eyes control the computer or phone.

A second advantage is that using your eyes is silent. Voice commands work well on their own, but in a noisy environment or around other people, using your voice might not be the best option.

Eye control is also an option for people with a physical disability who can move their eyes but not their limbs.

Compared to voice control, eye control has the benefit that it does not depend on language. That means an eye tracking implementation can be used anywhere in the world.

Drawbacks to eye control

The main drawback of eye control is the limited set of features that current solutions support. As long as the functionality is this limited, there is little reason to use it.

The second drawback is the accuracy. Using your eyes to control something requires 1) calibration, and 2) practice. Calibration is used to link the eye movement to a cursor, or screen location. Although this is an initialization process, good implementations will keep on improving the accuracy based on usage. But even when calibrated properly, it takes some effort to become proficient in using your eyes for accurate control. Think of the speed of movement, and holding a fixed location when you want to click there.
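To make the calibration step more concrete, here is a minimal Python sketch. It assumes the tracker reports raw gaze coordinates as values between 0 and 1, and that a simple straight-line mapping per axis is good enough. Real trackers use richer models, and the function names `fit_axis`, `calibrate`, and `to_screen` are made up for this example. The user looks at a few known points on the screen, and a least-squares fit links the raw readings to those screen positions.

```python
def fit_axis(raw, target):
    """Least-squares fit of target = scale * raw + offset for one axis."""
    n = len(raw)
    mean_raw = sum(raw) / n
    mean_target = sum(target) / n
    var = sum((r - mean_raw) ** 2 for r in raw)
    if var == 0:
        raise ValueError("calibration points must differ along this axis")
    cov = sum((r - mean_raw) * (t - mean_target) for r, t in zip(raw, target))
    scale = cov / var
    offset = mean_target - scale * mean_raw
    return scale, offset


def calibrate(samples):
    """samples: list of ((raw_x, raw_y), (screen_x, screen_y)) pairs."""
    raw_x = [raw[0] for raw, _ in samples]
    raw_y = [raw[1] for raw, _ in samples]
    scr_x = [scr[0] for _, scr in samples]
    scr_y = [scr[1] for _, scr in samples]
    return fit_axis(raw_x, scr_x), fit_axis(raw_y, scr_y)


def to_screen(raw, calibration):
    """Map one raw gaze reading to a screen position in pixels."""
    (scale_x, offset_x), (scale_y, offset_y) = calibration
    return scale_x * raw[0] + offset_x, scale_y * raw[1] + offset_y


# Assumed example data: the user looked at the four corners and the
# centre of a 1920x1080 screen while the tracker recorded raw readings.
samples = [
    ((0.1, 0.1), (0, 0)),
    ((0.9, 0.1), (1920, 0)),
    ((0.1, 0.9), (0, 1080)),
    ((0.9, 0.9), (1920, 1080)),
    ((0.5, 0.5), (960, 540)),
]
calibration = calibrate(samples)
print(to_screen((0.5, 0.5), calibration))  # lands close to the screen centre
```

Improving accuracy during use, as good implementations do, could be as simple as adding new samples whenever the user confirms a click and running the fit again.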

How your head is positioned relative to the camera, whether or not you are wearing glasses, and how much your head moves all affect the tracking accuracy.

Processing eye movement based on camera input also requires a relatively high amount of CPU power. Compared to a mouse, trackball, or touch screen, that is not always practical. Some solutions use extra hardware, which reduces the CPU requirement.

Implementations for controlling devices with your eyes

One of the first well-known solutions for eye control was Eye Tribe, which started in 2012. These days, there are many more solutions. Some offer a program to install that controls the mouse. GazePointer is a good example: it offers a Windows program to control the mouse cursor. I was impressed with the immediate accuracy when testing the solution with a simple laptop webcam. But even after calibrating, practical use is difficult because I am not used to eye control. You can easily check it out by downloading and installing it on a Windows system (it does require .NET Framework version 3.5, so you might need to install that as well).

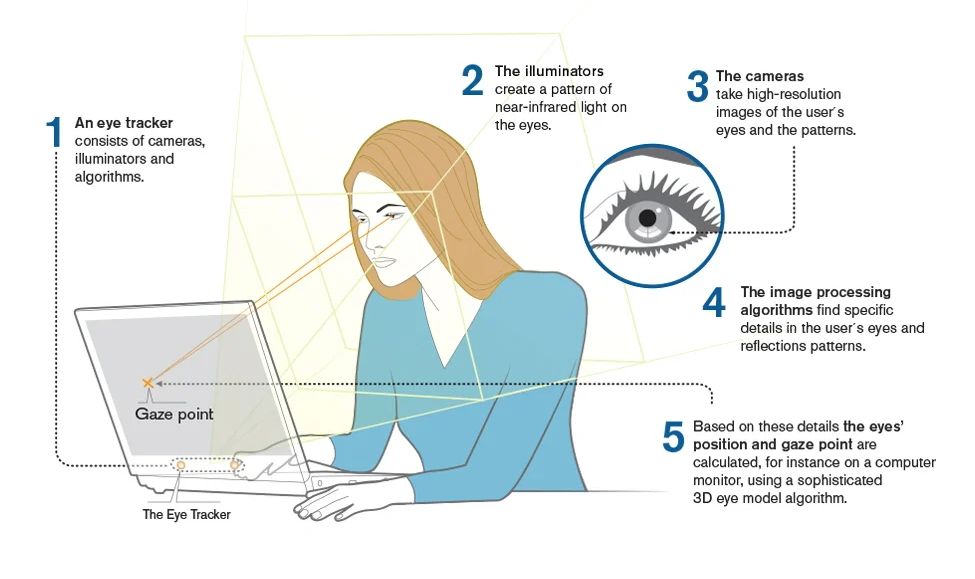

Tobii is another solution to eye tracking. Here is an illustration of how their solution works.

The Tobii devices are actually what Windows 10 and Windows 11 support for eye control in Windows. Instructions on how to use eye control in Windows can be found on the Microsoft support site.

Another solution available is WebGazer, a JavaScript library for eye tracking. This and other similar solutions serve what are currently the more practical use cases: checking what a user is looking at. This is great for testing various layouts, both for marketing and efficiency purposes. Advertisers love to know what you are looking at (first). WebGazer originates from research done at Brown University.

Gaze control on the phone

One of the more recent developments is by the Future Interfaces Group at Carnegie Mellon University. They are developing a tool called EyeMU. This solution tries to combine the gaze with motion sensors in a phone to offer accuracy and functionality. In the end, it is all about accuracy and functionality to make eye control useful.

The benefit of modern computers and smartphones is that they have sufficient processing power to make these types of solutions practical.

It will be interesting to see how far eye control will go in the future.
